Scaling blockchains 101 - Part 1

Hmmm, what are some practical ways to scale a blockchain network?

Introduction

The rapid growth and widespread adoption of blockchain technology have recently sparked considerable interest in its scalability. As more users and companies enter the industry, understanding how these networks can scale becomes increasingly important. This article aims to provide a comprehensive introduction to blockchain scalability and to dispel some common misconceptions.

What to do?

Before going into any scaling solution, it's worth stating that you cannot scale a blockchain network simply by tweaking its parameters. Shortening the block time and increasing the block size both raise the hardware requirements of running a node. For example, Ethereum currently has a 12-second block time and blocks of up to roughly 1.5 MB, which translates into a hardware requirement of about 8 GB of RAM and a dual-core CPU, comparable to an average person's computer. Bitcoin, with a 10-minute block time and a 1 MB block size, could even be run on a phone back in 2010! Now, let's look at Solana: it has a 0.4-second block time and a theoretical maximum block size of 128 MB, and here are the requirements to barely run a node for it:

Solana's developers have traded decentralization for performance: only the super-rich and data centers can run nodes!

For clarification, "nodes" here means user nodes: mini-servers you use to sync and read data from the blockchain, not miners or validators!
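To put these parameters side by side, here is a rough back-of-the-envelope sketch. It divides the block sizes quoted above by the block times to get the worst-case rate at which block data reaches a node, assuming every block is completely full and ignoring compression, state growth, and pruning. The chain figures are the approximate ones from this article, not measured values.

```python
# Worst-case block data a node must download, using the article's approximate figures.
SECONDS_PER_DAY = 24 * 60 * 60

# name: (block size in MB, block time in seconds)
CHAINS = {
    "Bitcoin": (1.0, 600.0),
    "Ethereum": (1.5, 12.0),
    "Solana": (128.0, 0.4),
}


def data_rate_mb_per_s(block_mb: float, block_time_s: float) -> float:
    """Megabytes of new block data per second if every block is full."""
    return block_mb / block_time_s


def growth_gb_per_day(block_mb: float, block_time_s: float) -> float:
    """Gigabytes of new block data per day (1 GB = 1000 MB) if every block is full."""
    return data_rate_mb_per_s(block_mb, block_time_s) * SECONDS_PER_DAY / 1000


for name, (size_mb, interval_s) in CHAINS.items():
    print(f"{name:9s} {data_rate_mb_per_s(size_mb, interval_s):9.3f} MB/s"
          f" {growth_gb_per_day(size_mb, interval_s):10.1f} GB/day")
```

Under these assumptions, Bitcoin produces about 144 MB of block data per day and Ethereum about 10.8 GB, while Solana's figures work out to roughly 27.6 TB per day, which helps explain why its node requirements are out of reach for ordinary users.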

Being unable to run their own nodes centralizes the network, because users are forced to rely on centralized node providers such as Infura, Moralis, and QuickNode. The only way to scale a blockchain is to move the heavy work off-chain while keeping some connection to the chain, combined with cryptographic tricks so that, even though the work is not done entirely on-chain, the result is still correct and trustless.

Ideally, "good" scaling solutions are:

"Decentralized/Trustless" - People can perform the off-chain computation themselves, or a network with many participants does it for them, and that network must offer a reasonable way for new participants to join (otherwise it stays permanently centralized among its existing participants).

"Secure" - There should be no reasonable way for an attacker or a centralized entity to attack or manipulate the protocol, and the protocol's security should stay roughly the same as the network scales further.

State channels & Plasma

First, we will examine the two most straightforward "layer 2" scaling solutions - state channels and plasma chains.

State channels

Consider a group of people who want to transact with each other. They deposit assets into a smart contract on-chain, exchange their planned transactions, compute the result off-chain, and then submit one final transaction that settles this result on-chain, approved by everyone in the group through their signatures. That is what a state channel is. State channels are advantageous when known parties make many transactions back and forth over some period, since they reduce all of that activity to just one or two on-chain transactions. Every in-channel transaction is also instant, because it is not on-chain at all, just a short-term mutual agreement between the parties.
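The flow above can be sketched as a toy two-party payment channel. This is a minimal illustration under loud assumptions: HMAC with a per-party secret stands in for real digital signatures, the names `ChannelState`, `pay`, and `settle` are hypothetical, and a real channel would also need a dispute window in which a state with a higher nonce can override an older one.

```python
import hashlib
import hmac
from dataclasses import dataclass

# ASSUMPTION: HMAC with a per-party secret stands in for real digital signatures
# (a real contract would verify public-key signatures such as ECDSA).
KEYS = {"alice": b"alice-secret", "bob": b"bob-secret"}


def sign(party: str, message: bytes) -> bytes:
    return hmac.new(KEYS[party], message, hashlib.sha256).digest()


def verify(party: str, message: bytes, sig: bytes) -> bool:
    return hmac.compare_digest(sign(party, message), sig)


@dataclass(frozen=True)
class ChannelState:
    nonce: int  # bumped on every off-chain update
    alice: int  # Alice's balance inside the channel
    bob: int    # Bob's balance inside the channel

    def encode(self) -> bytes:
        return f"{self.nonce}:{self.alice}:{self.bob}".encode()


def pay(state: ChannelState, sender: str, amount: int) -> ChannelState:
    """Off-chain update between the two parties; nothing is sent to the chain."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if sender == "alice" and amount <= state.alice:
        return ChannelState(state.nonce + 1, state.alice - amount, state.bob + amount)
    if sender == "bob" and amount <= state.bob:
        return ChannelState(state.nonce + 1, state.alice + amount, state.bob - amount)
    raise ValueError("unknown sender or insufficient channel balance")


def settle(state: ChannelState, sigs: dict[str, bytes], deposits: int) -> dict[str, int]:
    """The checks an on-chain contract would run before paying out (one transaction)."""
    message = state.encode()
    if not all(verify(p, message, sigs.get(p, b"")) for p in KEYS):
        raise ValueError("final state must be signed by every party")
    if state.alice + state.bob != deposits:
        raise ValueError("balances must add up to the deposits")
    return {"alice": state.alice, "bob": state.bob}


# Both parties deposit 50 on-chain, then pay each other off-chain.
state = ChannelState(nonce=0, alice=50, bob=50)
for _ in range(30):
    state = pay(state, "alice", 1)
for _ in range(10):
    state = pay(state, "bob", 1)

sigs = {p: sign(p, state.encode()) for p in KEYS}
payout = settle(state, sigs, deposits=100)
print(state.nonce, payout)  # 40 {'alice': 30, 'bob': 70}
```

Forty payments change hands off-chain, yet only the opening deposit and the single `settle` call would touch the chain.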

State channels excel when parties make a large number of payments. They are as fast as the everyday payment apps you are familiar with, but you don't pay anything for transfers made inside the channel. You only pay for creating and settling the channel on-chain, so if you make hundreds of transfers, you pay for just one or two transactions.
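The fee arithmetic is simple enough to spell out. The sketch below assumes a hypothetical flat fee of 0.50 per on-chain transaction and counts a channel as exactly two on-chain transactions (open and settle); real fees vary with network load.

```python
FEE = 0.50  # hypothetical flat fee per on-chain transaction


def direct_cost(payments: int, fee: float = FEE) -> float:
    """Every payment is its own on-chain transaction."""
    return payments * fee


def channel_cost(payments: int, fee: float = FEE) -> float:
    """Open + settle on-chain; payments inside the channel are free,
    so the cost does not depend on `payments`."""
    return 2 * fee


for n in (1, 2, 300):
    print(f"{n:4d} payments: direct {direct_cost(n):7.2f}, channel {channel_cost(n):5.2f}")
```

With 300 payments, paying directly costs 150.00 while the channel costs 1.00; with a single payment, the channel is actually more expensive (1.00 versus 0.50).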

However, there are several drawbacks. State channels are not helpful if users only make a few transactions: since a channel reduces n transactions to one or two, it gains nothing for a single transfer. Furthermore, you can only transact within a limited group of people, because the protocol relies on a multi-sig that every party must sign. If you expanded a channel to a global scale, a single attacker could refuse to sign, and everyone's assets would be stuck. In practice, such a system is limited to roughly 2 to 20 people. It is also impossible to build many kinds of applications with channels alone, again because they are limited to a fixed group of parties; a complex decentralized lending protocol that relies on global consensus, for example, cannot be built this way.
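Why does the group have to stay small? One way to see it is a toy probability model, assuming each party independently cooperates (stays online and signs) with the same probability; a multi-sig that needs every party can only close cooperatively if all of them do. The 0.99 figure is an arbitrary illustration, not a measurement.

```python
def all_cooperate(parties: int, p_cooperate: float) -> float:
    """Probability that an all-parties multi-sig can sign, assuming each party
    independently cooperates (online and willing) with probability p_cooperate."""
    return p_cooperate ** parties


for n in (2, 20, 1000):
    print(f"{n:5d} parties: {all_cooperate(n, 0.99):.6f}")
```

With a 99% per-party cooperation rate, a 2-party channel closes cooperatively about 98% of the time and a 20-party one about 82% of the time, but a 1,000-party channel only about 0.004% of the time.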

In practice, state channels are popular among experienced Bitcoin users: the Lightning Network is built on payment channels, and many Bitcoin users who dig deep enough end up using it for everyday payments.

Plasma chains

Another type of scaling solution limited to a group of parties is the "plasma chain". You deposit assets into the plasma protocol. You, or any other party in the same transacting group, keep transactions off-chain and periodically post a state commitment to layer 1 claiming the resulting state of those transactions. If any party thinks a commitment is false, they open a dispute and submit proof that it is faulty. Ultimately, you can withdraw your assets according to the current state commitment by submitting your account's state.
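A natural way to build such a state commitment is a Merkle root over account balances, which lets a user withdraw by submitting their account state plus a short proof. The sketch below assumes that design; the leaf encoding, SHA-256 hashing, and the rule of duplicating the last node on odd-sized levels are illustrative choices, not any specific plasma implementation.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf(account: str, balance: int) -> bytes:
    return h(f"{account}:{balance}".encode())


def merkle_root(leaves: list[bytes]) -> bytes:
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether the sibling sits on the right."""
    proof, level = [], list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify_withdrawal(root: bytes, account: str, balance: int,
                      proof: list[tuple[bytes, bool]]) -> bool:
    """What layer 1 checks when a user withdraws against a posted root."""
    node = leaf(account, balance)
    for sibling, sibling_is_right in proof:
        node = h(node + sibling) if sibling_is_right else h(sibling + node)
    return node == root


balances = [("alice", 30), ("bob", 70), ("carol", 5)]
leaves = [leaf(a, b) for a, b in balances]
root = merkle_root(leaves)       # the state commitment posted to layer 1
proof = merkle_proof(leaves, 1)  # Bob's withdrawal proof
print(verify_withdrawal(root, "bob", 70, proof))   # True
print(verify_withdrawal(root, "bob", 700, proof))  # False
```

Only the 32-byte root goes on layer 1; Bob's withdrawal needs his balance and two sibling hashes, and claiming a different balance makes the proof fail.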

Plasma chains support more applications than state channels. Take chess, for example: a state channel cannot be used for it, because getting the game's result on-chain requires both players' signatures, and the loser can simply refuse to sign. With a plasma chain, however, all moves are recorded off-chain, so you can use them to construct a proof against a faulty state commitment (if it is faulty) or open an interactive dispute with the uploader on-chain. While state channels rely on a multi-sig, plasma chains rely on provable off-chain computation.
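The dispute idea boils down to re-execution: given the agreed starting state and the recorded transactions, anyone can recompute the resulting state and compare it with the posted commitment. This sketch swaps chess for simple balance transfers and does the whole re-execution in one step, whereas real fraud-proof systems often narrow a dispute down interactively; `commit`, `apply`, and `is_fraudulent` are hypothetical names.

```python
import hashlib
import json


def commit(state: dict[str, int]) -> str:
    """Stand-in state commitment: SHA-256 of the canonically serialized state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def apply(state: dict[str, int], txs: list[tuple[str, str, int]]) -> dict[str, int]:
    """Deterministic state transition; transfers the sender cannot cover are skipped."""
    new_state = dict(state)
    for sender, receiver, amount in txs:
        if 0 < amount <= new_state.get(sender, 0):
            new_state[sender] -= amount
            new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def is_fraudulent(pre_state: dict[str, int], txs: list[tuple[str, str, int]],
                  claimed_commitment: str) -> bool:
    """Re-run the recorded transactions and compare with the posted commitment."""
    return commit(apply(pre_state, txs)) != claimed_commitment


pre_state = {"alice": 10, "bob": 0}
recorded_txs = [("alice", "bob", 4), ("bob", "alice", 1)]
honest = commit(apply(pre_state, recorded_txs))  # state {"alice": 7, "bob": 3}
forged = commit({"alice": 0, "bob": 10})         # uploader claims Bob got everything
print(is_fraudulent(pre_state, recorded_txs, honest))  # False
print(is_fraudulent(pre_state, recorded_txs, forged))  # True
```

Note that the check is only possible if the challenger actually has `recorded_txs`, which is exactly the data availability problem described next.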

Plasma does suffer from one big problem, though: data availability. If transactions are lost or their ordering is unclear, participants may be unable to submit an on-chain proof against a faulty state commitment. This is also why plasma is limited to a group and cannot be used globally. Still, we will see a very similar model in the next section of this article.

Sharding & rollups

Another approach to scaling a blockchain is to split it into many smaller chains, which reduces the computation and data storage each chain needs. This is often called "sharding", and the child chains can be viewed as "rollups", another type of layer 2 scaling solution.

How *not* to shard

Sharding is often misunderstood because many people think it simply means splitting one chain into child chains, and that's it. This would cause colossal consensus security problems: each child chain is secured by only a fraction of the network's validators or hash power, so a 51% attack on one child chain is much easier, and if one child chain falls, the whole network falls. Another problem is that communication between the chains is complex without a relay.
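The security loss is easy to quantify under simple assumptions: stake (or hash power) is split evenly across `k` independent child chains, each running its own consensus, and an attacker can concentrate everything on one of them. Taking over that chain then needs just over half of its share, that is, just over 1/(2k) of the total.

```python
from fractions import Fraction


def stake_to_attack_one_shard(num_shards: int) -> Fraction:
    """Fraction of total stake an attacker must exceed to control one child chain,
    assuming stake is split evenly and each child chain runs its own consensus."""
    return Fraction(1, 2 * num_shards)


for k in (1, 4, 64):
    print(k, stake_to_attack_one_shard(k))
```

With 64 child chains, an attacker controlling a little over 1/128 (under 1%) of the total stake could take over one of them, compared with just over 1/2 for an unsharded chain.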

Then how do we shard?

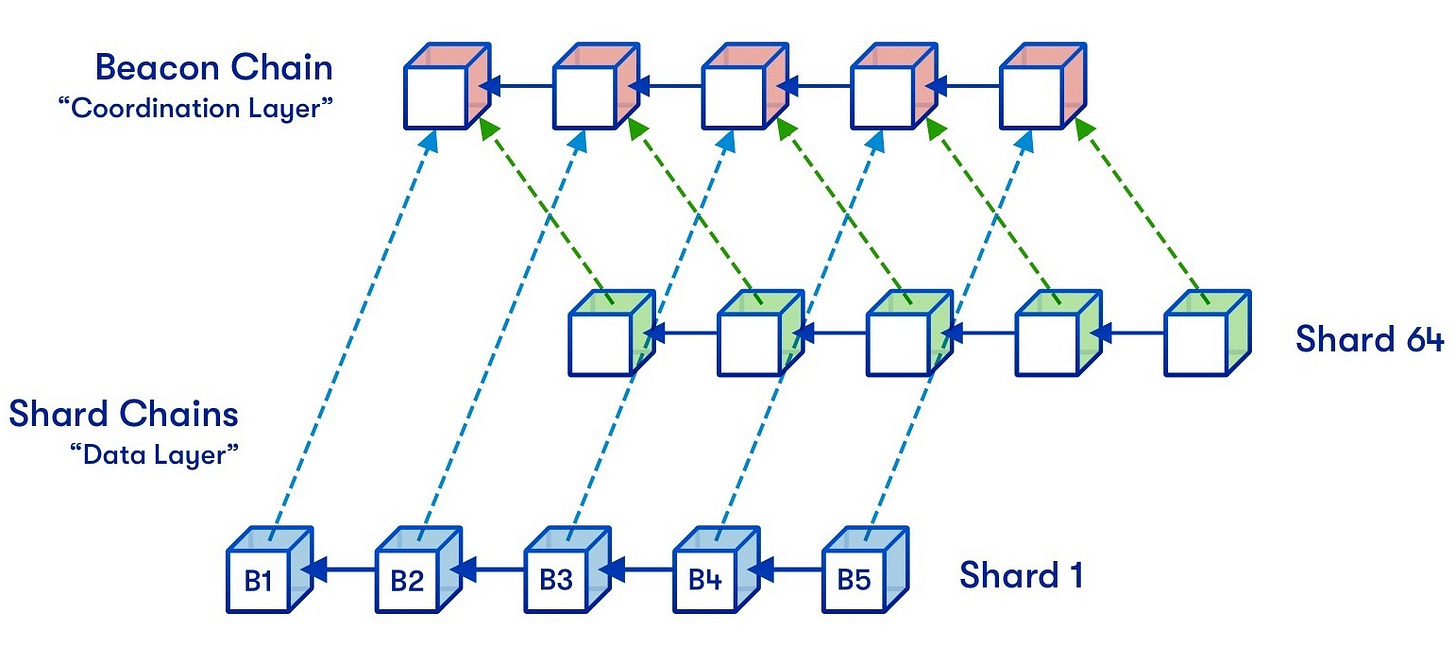

The correct way to shard is to make one main chain (the "beacon chain") serve as the consensus layer, with multiple child chains (or "rollups") uploading their transactions onto it. As a result, no child chain needs its own consensus protocol, so consensus security is not reduced. The key point is that each child chain only cares about its own transactions posted on the beacon chain, keeps its own independent state, and does nothing with other chains' data: transactions from other chains are present in the beacon block but ignored. By doing this, we have sharded transaction computation and state storage from one chain across many.
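Here is a toy model of how rollup nodes read a shared beacon block, assuming each transaction is tagged with the ID of the rollup it belongs to. The rollup IDs, payloads, and list-of-payloads "state" are hypothetical simplifications; the point is only that every rollup sees the same ordered block but applies just its own entries.

```python
from dataclasses import dataclass, field


@dataclass
class BeaconBlock:
    # Transactions from every rollup, in the order fixed by beacon-chain consensus.
    txs: list[tuple[str, str]]  # (rollup_id, payload)


@dataclass
class RollupNode:
    rollup_id: str
    applied: list[str] = field(default_factory=list)  # toy "state": payloads applied so far

    def process(self, block: BeaconBlock) -> None:
        for rollup_id, payload in block.txs:
            if rollup_id == self.rollup_id:  # other rollups' data is skipped
                self.applied.append(payload)


block = BeaconBlock(txs=[("A", "a-tx1"), ("B", "b-tx1"), ("A", "a-tx2")])
node_a, node_b = RollupNode("A"), RollupNode("B")
for node in (node_a, node_b):
    node.process(block)
print(node_a.applied, node_b.applied)  # ['a-tx1', 'a-tx2'] ['b-tx1']
```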

Bridging

To bridge tokens from the beacon chain to a rollup and back, the beacon chain needs a way to trust the rollup's state. There are usually two classes of rollups that ensure the correctness of this state: optimistic rollups and ZK rollups. Both upload a state root as a commitment to the rollup's global state. ZK rollups attach a small, cheap-to-verify proof that the uploaded rollup block or state commitment is correct, while optimistic rollups use a fraud-proof system: a rollup block or state commitment is not checked by default, until someone claims it is invalid and challenges the party who uploaded it.
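The practical difference between the two classes shows up in when a withdrawal can be paid out. The sketch below models it with a hypothetical seven-day challenge window and a boolean standing in for verification of a ZK validity proof; it does not implement any real proof system or fraud-proof game, and the state roots are placeholders.

```python
from dataclasses import dataclass

CHALLENGE_PERIOD = 7 * 24 * 3600  # hypothetical challenge window, in seconds


@dataclass
class Commitment:
    state_root: str
    posted_at: int             # time the commitment reached layer 1, in seconds
    kind: str                  # "optimistic" or "zk"
    proof_valid: bool = False  # zk: stand-in for "the validity proof verified"
    challenged: bool = False   # optimistic: a fraud proof against it succeeded


def is_final(c: Commitment, now: int) -> bool:
    """Can withdrawals against this state root be paid out on layer 1?"""
    if c.kind == "zk":
        # Checked up front: final as soon as the proof verifies.
        return c.proof_valid
    # Optimistic: not checked by default; final once the window passes unchallenged.
    return not c.challenged and now >= c.posted_at + CHALLENGE_PERIOD


zk = Commitment("0xabc", posted_at=0, kind="zk", proof_valid=True)
opt = Commitment("0xdef", posted_at=0, kind="optimistic")
print(is_final(zk, 60), is_final(opt, 60), is_final(opt, CHALLENGE_PERIOD))  # True False True
```

A ZK commitment with a valid proof is final immediately, while the optimistic one only becomes final once the challenge window has passed without a successful challenge.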

If there is no native bridge between the rollup and layer 1, these schemes are not needed. Such rollups are often called "sovereign rollups" or "sovereign embedded chains": they are as independent as standard layer 1s but do not require a new consensus protocol. However, they come with weak economics, since they require a new, immature token, and with insecure asset migration, since the bridge is not native to the rollup and developers have to build layer 1 to layer 1 bridges.

Data sharding

Even though everything is cheaper because state access, computation, and storage per chain are lower, the number of transactions the entire network can handle is the same, since the block size is unchanged. We are still processing just as many transactions! To scale up, we need to shard data availability (DA for short) as well.
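To see why sharding execution alone does not raise total throughput, consider the data bound. Assuming the roughly 1.5 MB, 12-second blocks quoted earlier and a hypothetical average rollup transaction size of 100 bytes, the chain can carry at most 1,250 transactions per second, no matter how many rollups share it.

```python
def network_tps(block_bytes: int, block_time_s: float, tx_bytes: int) -> float:
    """Upper bound on transactions per second the shared chain's blocks can carry."""
    return block_bytes / tx_bytes / block_time_s


def per_rollup_tps(block_bytes: int, block_time_s: float, tx_bytes: int, rollups: int) -> float:
    """Without data sharding, rollups split the same block space between them."""
    return network_tps(block_bytes, block_time_s, tx_bytes) / rollups


BLOCK_BYTES = 1_500_000  # roughly 1.5 MB, as quoted earlier
BLOCK_TIME_S = 12
TX_BYTES = 100           # hypothetical average rollup transaction size

for rollups in (1, 10, 100):
    each = per_rollup_tps(BLOCK_BYTES, BLOCK_TIME_S, TX_BYTES, rollups)
    print(f"{rollups:3d} rollups: {each:7.1f} tx/s each, {each * rollups:7.1f} tx/s total")
```

Adding rollups only divides the same 1,250 tx/s among more chains; the total stays fixed until the data layer itself grows.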

We introduce special pieces of data in the beacon block called "blobs", with a cryptographic commitment to each blob stored in the block. Rollups upload their transaction batches as these blobs. What makes this unique is that nodes verify each blob against its commitment once and then drop it, storing only the commitment. Rollup nodes (or third parties) store their own rollup's blobs and ignore the others, but they can still request other blobs from other rollup nodes when needed and verify them against the stored commitments.
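The verify-then-drop behaviour can be sketched as follows. Ethereum's blob design uses KZG polynomial commitments, but here a plain SHA-256 hash stands in for the commitment scheme, and `FullNode` and `check_fetched_blob` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field


def commitment(blob: bytes) -> str:
    # ASSUMPTION: SHA-256 stands in for the real blob commitment scheme (e.g. KZG).
    return hashlib.sha256(blob).hexdigest()


@dataclass
class FullNode:
    stored_commitments: list[str] = field(default_factory=list)

    def receive_block(self, blobs: list[bytes], commitments: list[str]) -> None:
        if len(blobs) != len(commitments):
            raise ValueError("every blob needs exactly one commitment")
        for blob, c in zip(blobs, commitments):
            if commitment(blob) != c:
                raise ValueError("blob does not match its commitment")
        # Verified once; the blobs themselves are not kept, only the commitments.
        self.stored_commitments.extend(commitments)

    def check_fetched_blob(self, index: int, blob: bytes) -> bool:
        """Verify a blob fetched later from a rollup node or a third party."""
        return commitment(blob) == self.stored_commitments[index]


blobs = [b"rollup A batch 1", b"rollup B batch 1"]
node = FullNode()
node.receive_block(blobs, [commitment(b) for b in blobs])
print(node.check_fetched_blob(1, b"rollup B batch 1"))  # True
print(node.check_fetched_blob(1, b"tampered batch"))    # False
```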

More complex cryptographic tricks mean that nodes do not even need to verify every blob in full. Each node only needs to receive small parts of the data and check them against the commitments. With this cryptography, the network can guarantee that the data is available without forcing any individual node to hold all of it.
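The intuition behind checking only small parts can be shown with a little probability. Assume the data is erasure-coded so that any half of the chunks is enough to rebuild it (a rate-1/2 code, an assumption for this sketch), which forces an attacker who wants the data to be unrecoverable to withhold at least half the chunks. Assume also that each node requests chunks independently and uniformly at random. The chance that a node is fooled, with every sample coming back fine, then shrinks exponentially with the number of samples.

```python
import math


def miss_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that all `samples` independent uniform random samples land on
    available chunks even though `withheld_fraction` of the chunks is missing."""
    return (1 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target: float) -> int:
    """Smallest sample count that brings the miss probability to `target` or below."""
    return math.ceil(math.log(target) / math.log(1 - withheld_fraction))


print(miss_probability(0.5, 30))
print(samples_needed(0.5, 1e-9))
```

With half the chunks withheld, 30 samples leave a node fooled with probability 2^-30 (about 1 in a billion), which is why `samples_needed(0.5, 1e-9)` comes out to 30.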

This is also what sets it apart from just increasing the block size: with bigger blocks, everyone has to store all of the big blocks and process all transactions. Here, separate child chains deal only with their own small blocks, and together they add more throughput to the whole network.

Through rollups and data sharding, transaction throughput increases, since more transactions can be handled without a large increase in per-node computation. However, there is still the one-time cost of transporting the data and the cost of verifying commitments, so data availability can only be sharded to a certain extent.

Part 2

In the next part, we will explore additional tricks to enhance the capabilities of rollups, some other minor approaches to scaling blockchains, more common misconceptions, and a quick recap.

Link to part 2: https://cryptodevs.substack.com/p/scaling-blockchains-101-part-2?sd=pf